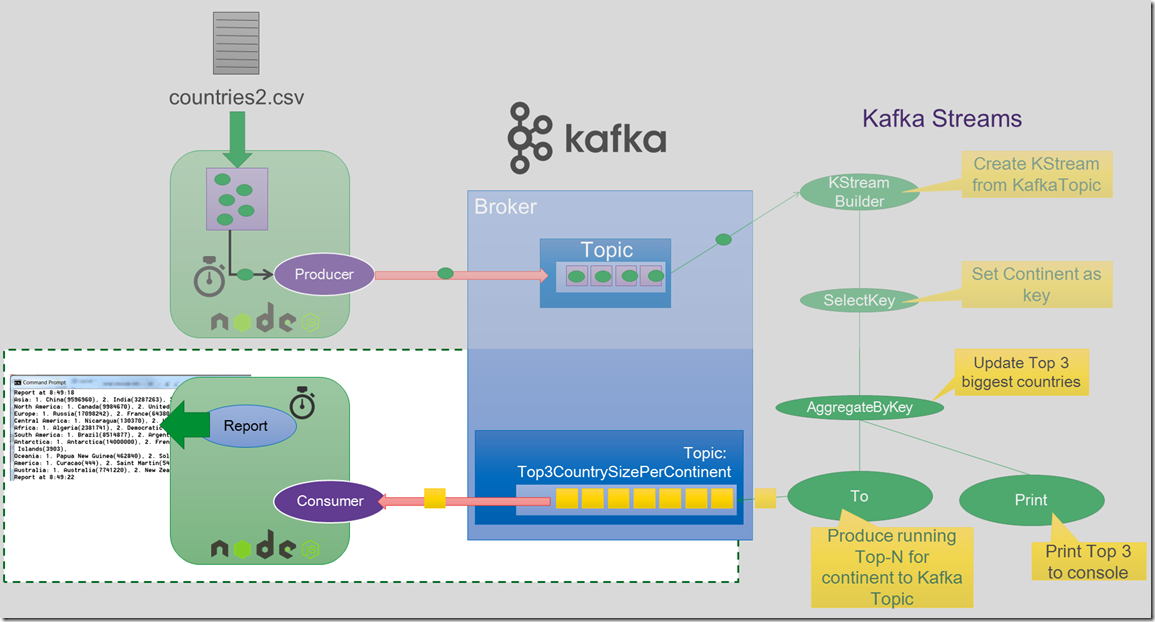

This is the second post in the NodeJS Microservices with NestJS, Kafka, and Kubernetes series, and we'll build up the second application, called microservices. Kafka is primarily used to build real-time streaming data pipelines and applications that adapt to the data streams. It combines messaging, storage, and stream processing to allow storage and …

(Optional) Run the docker-compose.yml file to start Schema Registry, ZooKeeper, and Kafka (make sure to replace localhost with your IP address). Provide all configurations in config.js as shown. Create schemas in Schema Registry using the APIs specified here. Refer to producer.js and consumer.js to see how to use this module.

KAFKA TOPICS

Kafka is about publishing and subscribing to topics. Given that our Kafka service is running on port 9092, we can easily create a client to connect to it.
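The schema-creation step in the setup instructions can be sketched as follows. This is a minimal sketch, assuming Schema Registry listens on its default port 8081 (replace localhost with your IP, as for docker-compose) and that the default TopicNameStrategy applies, so a topic's value schema is registered under the subject `<topic>-value`. The endpoint `POST /subjects/{subject}/versions` and the `application/vnd.schemaregistry.v1+json` content type come from the Schema Registry REST API; the helper name `buildRegisterSchemaRequest` and the `User` schema are hypothetical. The function only builds the request, so it runs without a live registry.

```javascript
'use strict';

// Hypothetical helper: builds (but does not send) the HTTP request that
// registers an Avro schema with Confluent Schema Registry.
function buildRegisterSchemaRequest(registryUrl, topic, avroSchema) {
  // Assumes the default TopicNameStrategy: value schemas for a topic are
  // registered under the subject "<topic>-value".
  const subject = `${topic}-value`;
  const base = registryUrl.replace(/\/+$/, ''); // strip trailing slashes
  return {
    url: `${base}/subjects/${encodeURIComponent(subject)}/versions`,
    method: 'POST',
    headers: { 'Content-Type': 'application/vnd.schemaregistry.v1+json' },
    // The registry expects the Avro schema as a JSON-encoded *string*
    // in the "schema" field, so it is stringified twice.
    body: JSON.stringify({ schema: JSON.stringify(avroSchema) }),
  };
}

// Hypothetical example schema for a "users" topic.
const userSchema = {
  type: 'record',
  name: 'User',
  fields: [
    { name: 'id', type: 'int' },
    { name: 'name', type: 'string' },
  ],
};

const req = buildRegisterSchemaRequest('http://localhost:8081', 'users', userSchema);
console.log(req.url); // http://localhost:8081/subjects/users-value/versions
```

With Node.js 18 or later, the request could then be sent using the global `fetch`, e.g. `await fetch(req.url, { method: req.method, headers: req.headers, body: req.body })`; the registry replies with the ID it assigned to the schema.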

NODEJS KAFKA INSTALL

For Ubuntu 16.04 and above, run the following step before installing the module:

`sudo apt install librdkafka-dev`

Then install the module using npm:

`npm install kafka-avro-nodejs --save`

The kafka-avro-nodejs library is a wrapper that combines the kafka-avro, node-rdkafka, and avsc libraries to allow production and consumption of Kafka messages that are validated and serialized by Avro. This module is used for implementing kafka-avro using Node.js.

To publish and install packages to and from a public or private npm registry, you must install Node.js and the npm command-line interface using either a Node version manager or a Node installer.

CONNECT TO KAFKA FROM NODEJS

We'll make use of an open-source SDK called kafka-node to connect to Kafka. In this lesson, we'll work through using Confluent Kafka from Node.js with code examples.

In this lightning talk, we will review some basic principles of event-based systems using Node.js and Kafka and get insights from real mission-critical use cases.

Deploy an example Node.js application on OpenShift.
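The kafka-avro-nodejs wrapper produces messages that are "validated and serialized by Avro" against Schema Registry, so it can help to see how such a message is laid out on the wire. This sketch assumes the Confluent Schema Registry wire format (a magic byte `0`, a 4-byte big-endian schema ID, then the Avro-encoded body), which Schema Registry-aware clients such as kafka-avro typically handle for you. The helper names `frameMessage` and `unframeMessage` are hypothetical, and the payload is a stand-in: in practice the Avro bytes would come from a library such as avsc, e.g. `avro.Type.forSchema(schema).toBuffer(value)`.

```javascript
'use strict';

// Sketch of the Confluent Schema Registry wire format: a magic byte (0),
// a 4-byte big-endian schema ID, then the Avro-encoded bytes.
const MAGIC_BYTE = 0;
const HEADER_SIZE = 5;

// Hypothetical helper: prefix an already Avro-encoded payload with the header.
function frameMessage(schemaId, avroPayload) {
  const header = Buffer.alloc(HEADER_SIZE);
  header.writeUInt8(MAGIC_BYTE, 0);
  header.writeInt32BE(schemaId, 1); // schema ID as a 32-bit big-endian int
  return Buffer.concat([header, avroPayload]);
}

// Hypothetical helper: split a framed message into schema ID and Avro payload.
function unframeMessage(message) {
  if (message.length < HEADER_SIZE || message.readUInt8(0) !== MAGIC_BYTE) {
    throw new Error('Message is not in Schema Registry wire format');
  }
  return {
    schemaId: message.readInt32BE(1),
    payload: message.subarray(HEADER_SIZE),
  };
}

// Stand-in bytes; a real producer would pass Avro encoder output here.
const framed = frameMessage(42, Buffer.from([0x02, 0x06, 0x61, 0x64, 0x61]));
const { schemaId, payload } = unframeMessage(framed);
console.log(schemaId, payload.length); // 42 5
```

Carrying the schema ID in every message lets a consumer look up (and cache) the matching schema in the registry before decoding, so it can read messages written with different schema versions.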
